Your self-hosted YouTube media server

Table of contents:

- Wiki for detailed documentation

- Core functionality

- Screenshots

- Problem Tube Archivist tries to solve

- Installing and updating

- Getting Started

- Potential pitfalls

- Roadmap

- Known limitations

- Donate

Core functionality

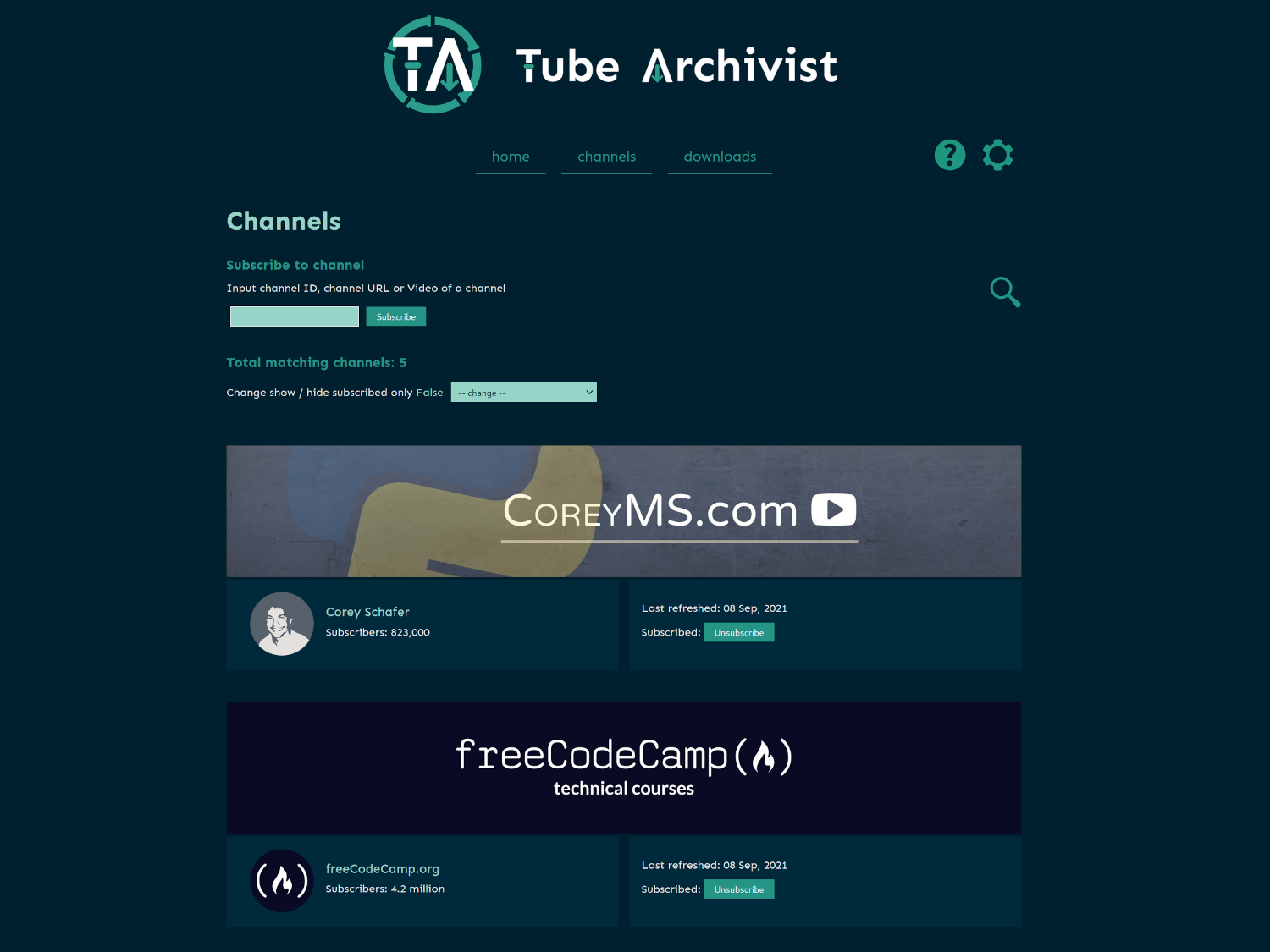

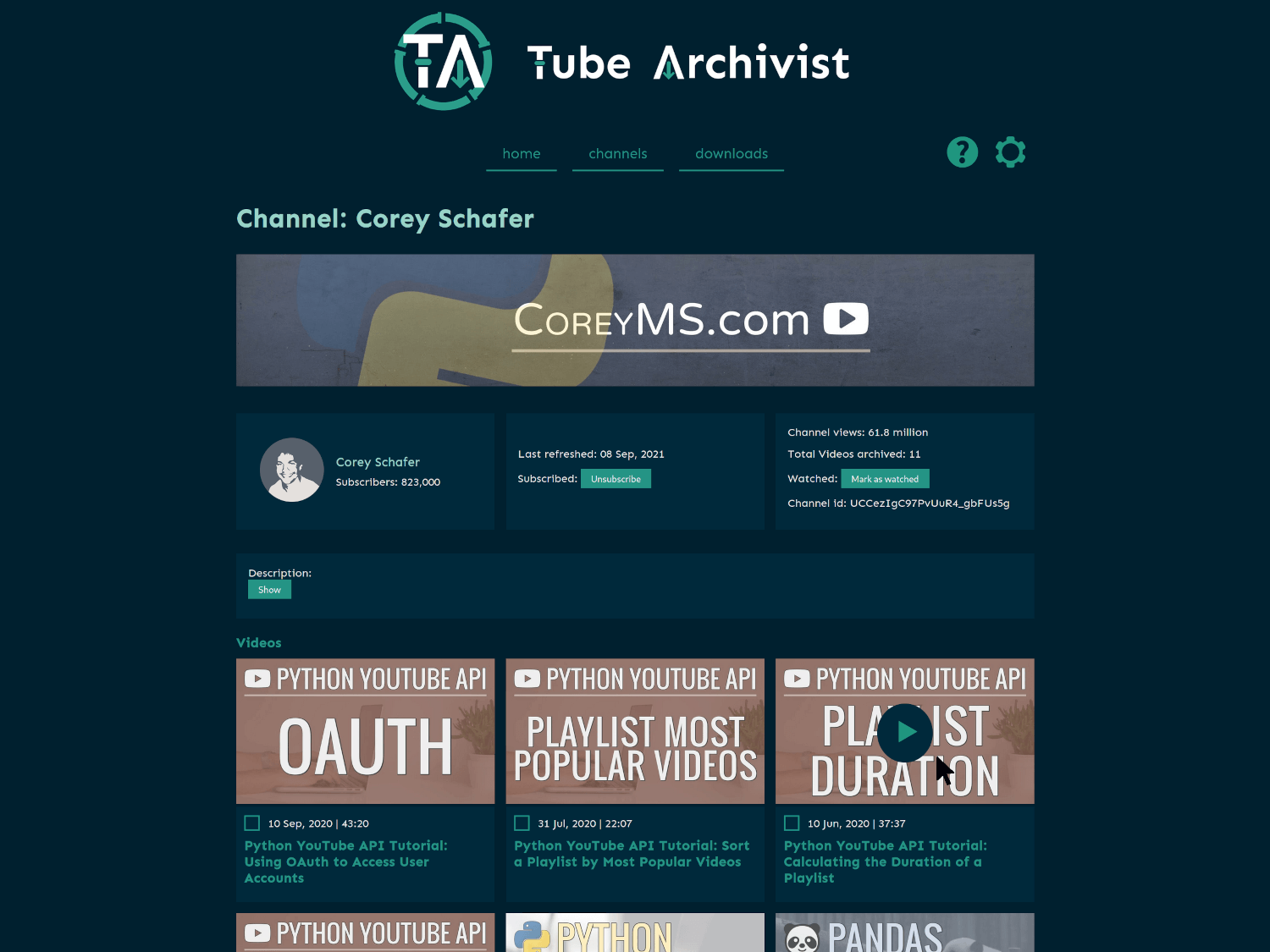

- Subscribe to your favorite YouTube channels

- Download Videos using yt-dlp

- Index and make videos searchable

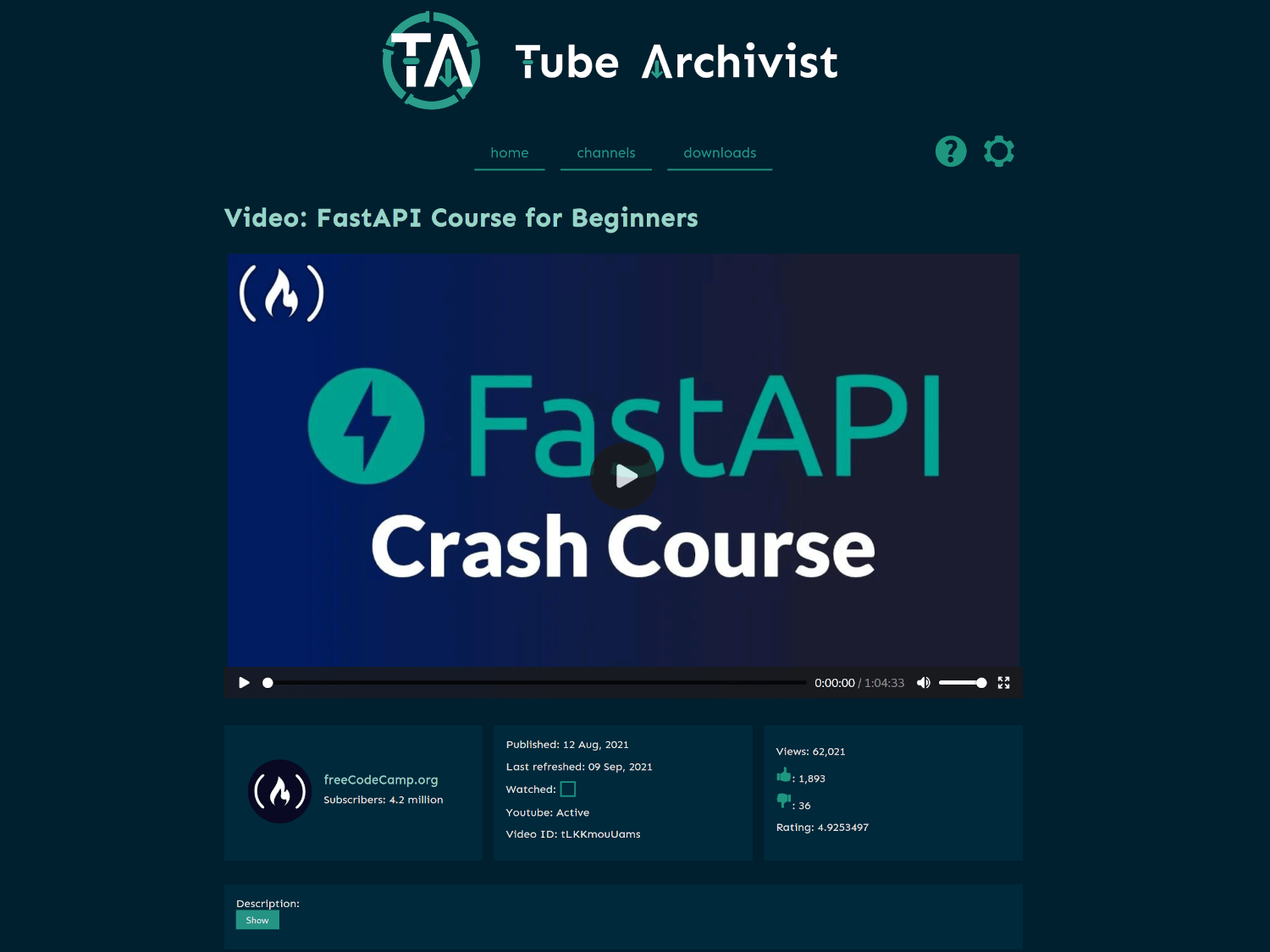

- Play videos

- Keep track of viewed and unviewed videos

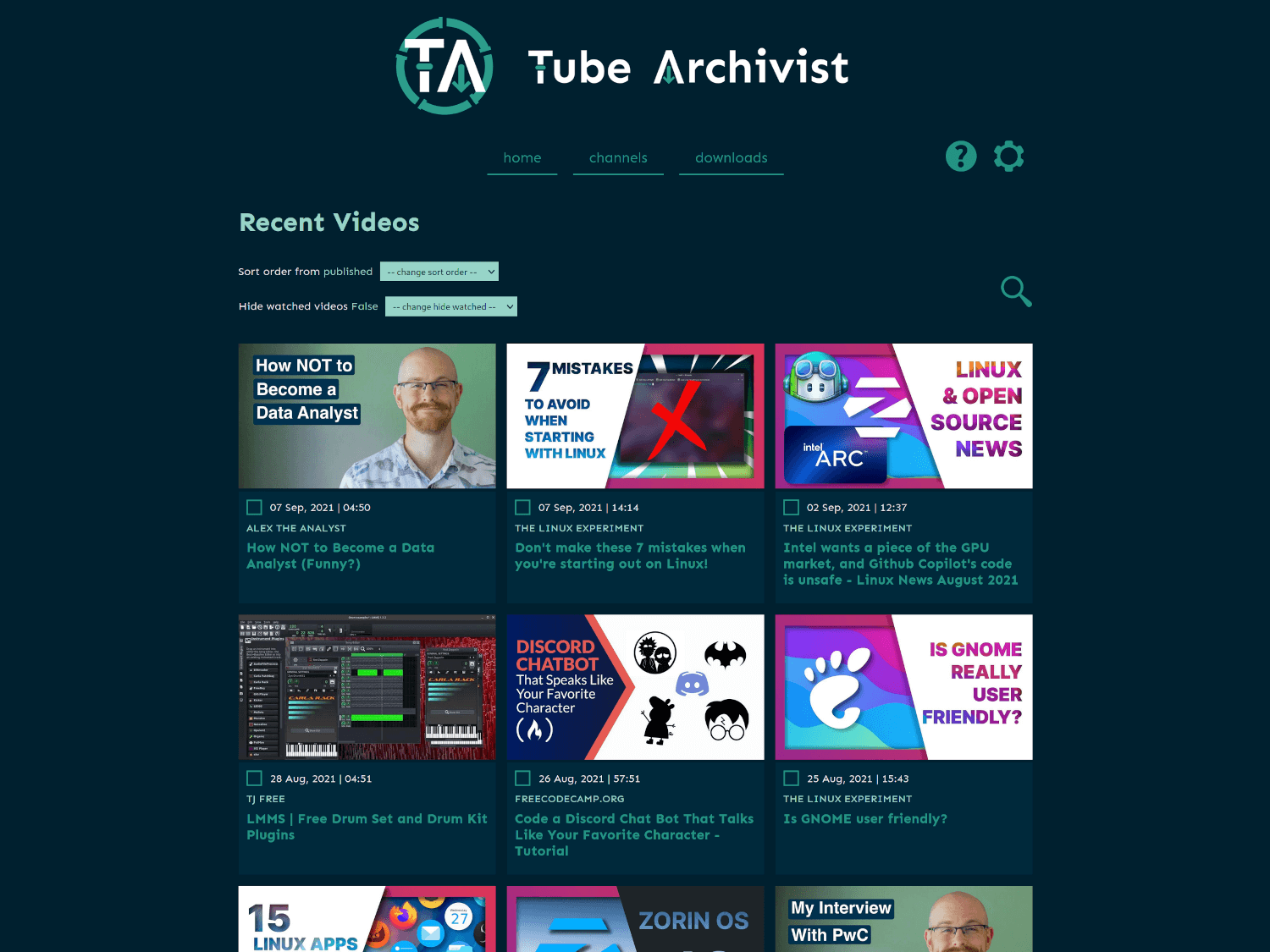

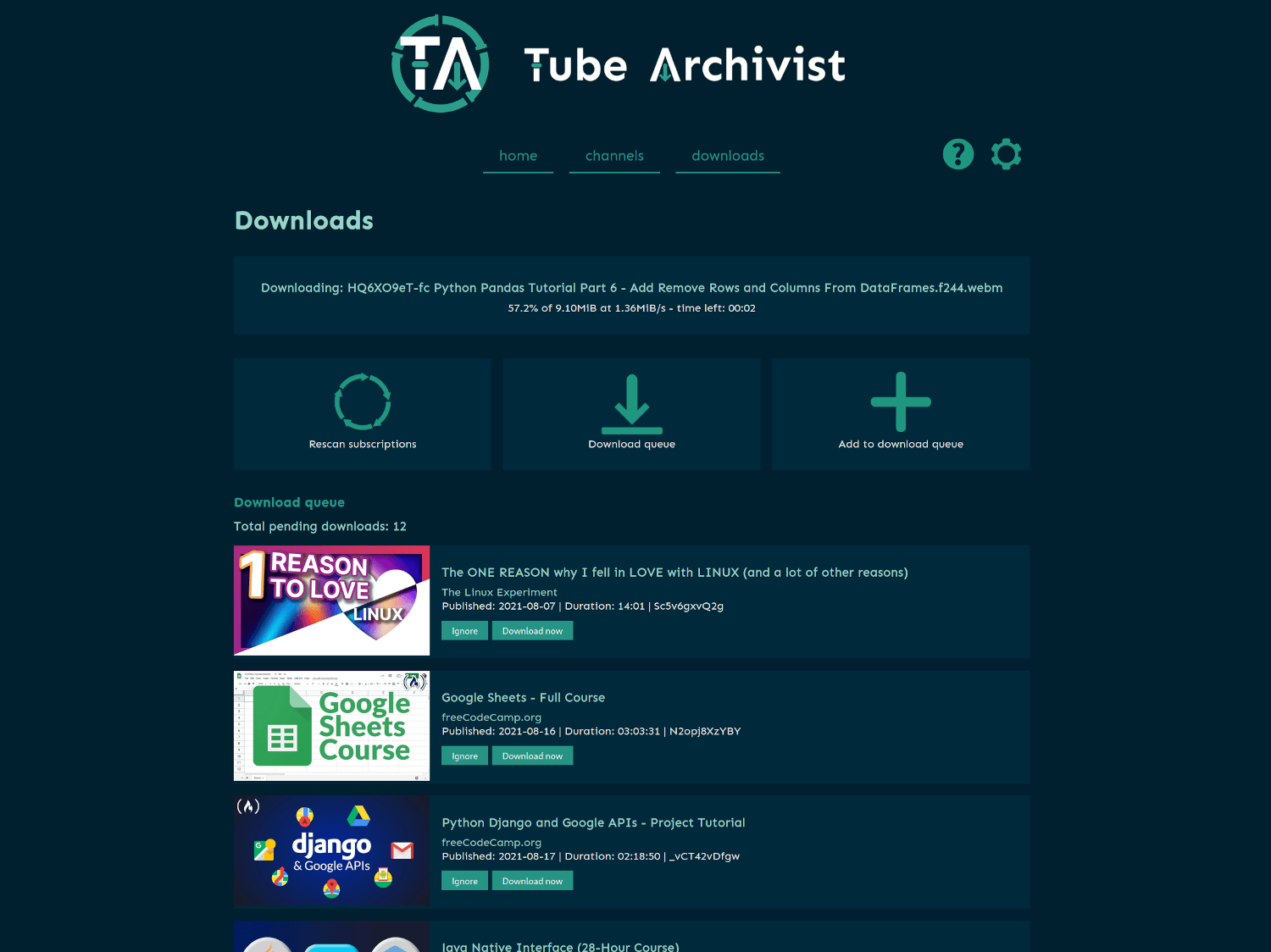

Screenshots

Problem Tube Archivist tries to solve

Once your YouTube video collection grows, it becomes hard to search and find a specific video. That's where Tube Archivist comes in: By indexing your video collection with metadata from YouTube, you can organize, search and enjoy your archived YouTube videos without hassle offline through a convenient web interface.

Installing and updating

Take a look at the example docker-compose.yml file provided. Tube Archivist depends on three main components split up into separate docker containers:

Tube Archivist

The main Python application that displays and serves your video collection, built with Django.

- Serves the interface on port 8000

- Needs a volume for the video archive at /youtube

- And another volume to save application data at /cache.

- The environment variables ES_URL and REDIS_HOST are needed to tell Tube Archivist where Elasticsearch and Redis respectively are located.

- The environment variables HOST_UID and HOST_GID allow Tube Archivist to chown the video files to the main host system user instead of the container user. Those two variables are optional; not setting them disables that functionality, which might be needed if the underlying filesystem, like NFS, doesn't support chown.

- Change the environment variables TA_USERNAME and TA_PASSWORD to create the initial credentials.

- ELASTIC_PASSWORD is the password for Elasticsearch. The environment variable ELASTIC_USER is optional, should you want to change the username from the default elastic.
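Before starting the stack, it can help to confirm that the variables listed above actually have values. The following is a minimal sketch in POSIX shell and is not part of Tube Archivist: the function name missing_vars and the REQUIRED_VARS list are made up for illustration, and treating the three credential variables as mandatory is an assumption based on the description above. It only inspects the shell it runs in, so run it wherever those variables are defined, for example in a shell inside the tubearchivist container.

```shell
# Variable names taken from the list above. Treating the credentials as
# mandatory is an assumption; adjust the list to your setup.
REQUIRED_VARS="ES_URL REDIS_HOST TA_USERNAME TA_PASSWORD ELASTIC_PASSWORD"

# missing_vars (hypothetical helper)
# Prints the name of every variable in REQUIRED_VARS that is unset or empty,
# one name per line.
missing_vars() {
    for name in $REQUIRED_VARS; do
        # Indirect lookup: copy the value of the variable called $name.
        eval "value=\${$name:-}"
        [ -n "$value" ] || echo "$name"
    done
}
```

Calling missing_vars prints one variable name per line. Empty output means every listed variable has a value.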

Elasticsearch

Stores video metadata and makes everything searchable. Also keeps track of the download queue.

- Needs to be accessible over the default port 9200

- Needs a volume at /usr/share/elasticsearch/data to store data

Follow the documentation for additional installation details.

Redis JSON

Functions as a cache and temporary link between the application and the file system. Used to store and display messages and configuration variables.

- Needs to be accessible over the default port 6379

- Needs a volume at /data to make your configuration changes permanent.

Redis on a custom port

For some architectures it might be required to run Redis JSON on a nonstandard port. For example, to change the Redis port to 6380, set the following values:

- Set the environment variable REDIS_PORT=6380 to the tubearchivist service.

- For the archivist-redis service, change the ports to 6380:6380

- Additionally set the following value to the archivist-redis service:

command: --port 6380 --loadmodule /usr/lib/redis/modules/rejson.so

Updating Tube Archivist

You will see the current version number of Tube Archivist in the footer of the interface so you can compare it with the latest release to make sure you are running the latest and greatest.

- There can be breaking changes between updates. Particularly as the application grows, you might need to set new environment variables or settings in your docker-compose file. Always check the release notes: any breaking changes will be marked there.

- All testing and development is done with the Elasticsearch version number as mentioned in the provided docker-compose.yml file. This will be updated when a new release of Elasticsearch is available. Running an older version of Elasticsearch is most likely not going to result in any issues, but it's still recommended to run the same version as mentioned.

Alternative installation instructions:

- arm64: The Tube Archivist container is multi arch, and so is Elasticsearch. RedisJSON doesn't offer arm builds, but you can use bbilly1/rejson, an unofficial rebuild for arm64.

- Synology: There is a discussion thread with Synology installation instructions.

- Unraid: The three containers needed are all in the Community Applications. First install TubeArchivist RedisJSON followed by TubeArchivist ES, and finally you can install TubeArchivist. If you have Unraid specific issues, report those to the support thread.

Potential pitfalls

vm.max_map_count

Elasticsearch in Docker requires the kernel setting vm.max_map_count of the host machine to be set to at least 262144.

To temporarily set the value, run:

sudo sysctl -w vm.max_map_count=262144

How to apply the change permanently depends on your host operating system:

- For example, on Ubuntu Server add vm.max_map_count = 262144 to the file /etc/sysctl.conf.

- On Arch based systems, create a file /etc/sysctl.d/max_map_count.conf with the content vm.max_map_count = 262144.

- On any other platform, look up in the documentation how to pass kernel parameters.
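To confirm that the setting took effect, you can read the current value back and compare it with the minimum. This is a minimal sketch in POSIX shell: the function name check_map_count is made up for illustration, and it assumes a Linux host that exposes the value at the usual procfs path /proc/sys/vm/max_map_count.

```shell
# Minimum value required by Elasticsearch in Docker, as stated above.
REQUIRED_MAP_COUNT=262144

# check_map_count [FILE] (hypothetical helper)
# Reads the current value from FILE, defaulting to the procfs path, and
# reports whether it meets the minimum. Returns 0 if it does, 1 otherwise.
check_map_count() {
    file="${1:-/proc/sys/vm/max_map_count}"
    current="$(cat "$file")" || return 1
    if [ "$current" -ge "$REQUIRED_MAP_COUNT" ]; then
        echo "ok: vm.max_map_count=$current"
    else
        echo "too low: vm.max_map_count=$current (need $REQUIRED_MAP_COUNT)"
        return 1
    fi
}
```

Run check_map_count without arguments on the Docker host. The optional file argument only exists so the check can be tried against a test file.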

Permissions for elasticsearch

If you see a message similar to AccessDeniedException[/usr/share/elasticsearch/data/nodes] when initially starting Elasticsearch, that means the container is not allowed to write files to the volume.

That's most likely the case when you run docker-compose as an unprivileged user. To fix that issue, shut down the container and on your host machine run:

chown 1000:0 /path/to/mount/point

This will match the permissions with the UID and GID of elasticsearch within the container and should fix the issue.
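To verify the result, you can print the numeric owner of the mount point and compare it with 1000:0. The sketch below is in POSIX shell and assumes GNU coreutils stat, whose -c option takes a format string; other stat implementations use different flags. The function names volume_owner and check_es_volume are made up for illustration.

```shell
# volume_owner DIR (hypothetical helper)
# Prints the numeric owner of DIR as UID:GID, using GNU stat syntax.
volume_owner() {
    stat -c '%u:%g' "$1"
}

# check_es_volume DIR (hypothetical helper)
# Returns 0 if DIR is owned by 1000:0, the UID and GID used in the chown
# command above, and 1 otherwise.
check_es_volume() {
    owner="$(volume_owner "$1")" || return 1
    if [ "$owner" = "1000:0" ]; then
        echo "ok: $1 is owned by $owner"
    else
        echo "mismatch: $1 is owned by $owner, expected 1000:0"
        return 1
    fi
}
```

Call check_es_volume with the same /path/to/mount/point you passed to chown.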

Disk usage

The Elasticsearch index will turn read-only if the disk usage of the container goes above 95%, and it stays that way until the usage drops below 90% again. Similarly, Tube Archivist will become all sorts of messed up when running out of disk space. There are some error messages in the logs when that happens, but it's best to make sure you have enough disk space before starting to download.
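A quick check before a large download can catch this early. The following is a minimal sketch in POSIX shell that maps a usage percentage onto the two thresholds mentioned above. The function names and the labels ok, warning and critical are made up for illustration, and the sketch assumes df -P reports a numeric percentage in the fifth column of its output for the given path.

```shell
# disk_usage_percent PATH (hypothetical helper)
# Prints the used percentage of the filesystem holding PATH, without the
# percent sign, taken from the portable df -P output.
disk_usage_percent() {
    df -P "$1" | awk 'NR == 2 { sub("%", "", $5); print $5 }'
}

# classify_usage PERCENT (hypothetical helper)
# critical: above 95, where the index turns read-only.
# warning:  90 to 95, where a read-only index has not yet recovered.
# ok:       below 90.
classify_usage() {
    if [ "$1" -gt 95 ]; then
        echo "critical"
    elif [ "$1" -ge 90 ]; then
        echo "warning"
    else
        echo "ok"
    fi
}

# check_disk PATH (hypothetical helper)
# Classifies the current usage of the filesystem holding PATH.
check_disk() {
    classify_usage "$(disk_usage_percent "$1")"
}
```

Point check_disk at the host directories that back your volumes, for example the directory mounted at /youtube, since the video archive is usually what fills the disk.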

Getting Started

- Go through the settings page and look at the available options. In particular, set Download Format to your desired video quality before downloading. Tube Archivist downloads the best available quality by default. To support iOS or macOS and some other browsers, a compatible format must be specified. For example:

bestvideo[vcodec*=avc1]+bestaudio[acodec*=mp4a]/mp4

- Subscribe to some of your favorite YouTube channels on the channels page.

- On the downloads page, click on Rescan subscriptions to add videos from the subscribed channels to your Download queue or click on Add to download queue to manually add Video IDs, links, channels or playlists.

- Click on Start download and let Tube Archivist do its thing.

- Enjoy your archived collection!

Roadmap

This should be considered a minimum viable product; there is an extensive list of future functions and improvements planned.

Functionality

- Auto rescan and auto download on a schedule

- User roles

- Podcast mode to serve channel as mp3

- Implement PyFilesystem for flexible video storage

- Optional automatic deletion of watched items after a specified time

- Subtitle download & indexing

- Create playlists [2021-11-27]

- Access control [2021-11-01]

- Delete videos and channel [2021-10-16]

- Add thumbnail embed option [2021-10-16]

- Un-ignore videos [2021-10-03]

- Dynamic download queue [2021-09-26]

- Backup and restore [2021-09-22]

- Scan your file system to index already downloaded videos [2021-09-14]

UI

- Fancy advanced unified search interface

- Show similar videos on video page

- Multi language support

- Show total video downloaded vs total videos available in channel

- Grid and list view for both channel and video list pages [2021-10-03]

- Create a github wiki for user documentation [2021-10-03]

Known limitations

- Video files created by Tube Archivist need to be mp4 video files for best browser compatibility.

- Every limitation of yt-dlp will also be present in Tube Archivist. If yt-dlp can't download or extract a video for any reason, Tube Archivist won't be able to either.

- For now this is meant to be run in a trusted network environment. Not everything is properly authenticated.

- There is currently no flexibility in naming of the media files.

Donate

The best donation to Tube Archivist is your time. Take a look at the contribution page to get started.

The second best way to support the development is to provide for caffeinated beverages:

- Paypal.me for a one time coffee

- Paypal Subscription for a monthly coffee

- ko-fi.com for an alternative platform